How it Works

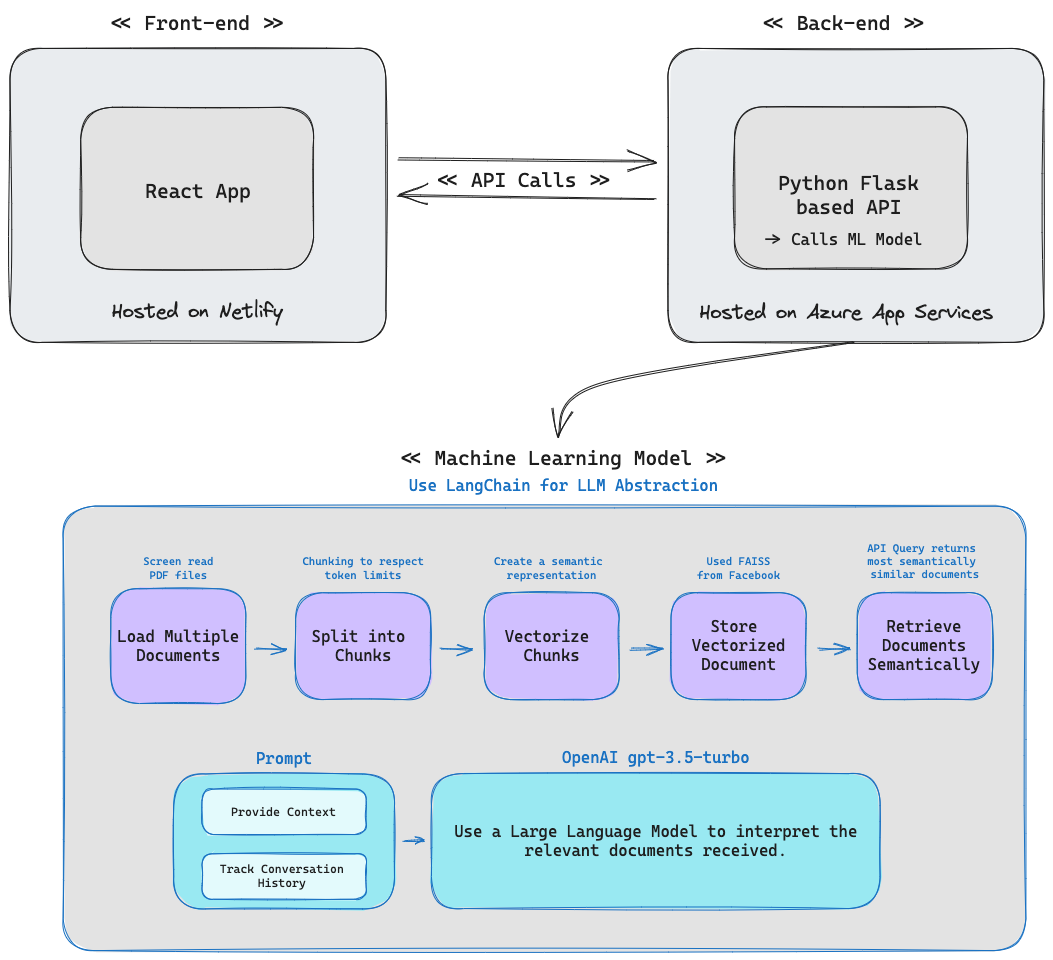

This chatbot uses a large language model (LLM) to generate responses to your messages. When you enter a message, the chatbot sends it to a back-end API that I built.

The API queries a vector database built from documents about my professional career and retrieves the passages most relevant to your message. Those retrieved passages are then passed to the LLM, along with a prompt that sets the response style, to generate a reply. The history of your messages and the model's responses is also included in the prompt, so each new response keeps the overall context of the conversation.
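As a rough illustration of the prompt assembly described above, here is a minimal Python sketch. It assumes the OpenAI chat format of `role`/`content` message dictionaries; `STYLE_PROMPT`, `build_messages`, `record_turn`, and the `max_turns` cap are hypothetical names chosen for this example, not the actual code behind this chatbot. It only builds the message list and leaves out the call to the model.

```python
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical style prompt; the wording used by the real chatbot is not published.
STYLE_PROMPT = (
    "You answer questions about my professional career in a friendly, concise tone. "
    "Use only the context below; if the answer is not there, say so."
)


def build_messages(
    question: str,
    context_chunks: List[str],
    history: List[Message],
    max_turns: int = 10,
) -> List[Message]:
    """Assemble one turn: style prompt + retrieved context, recent history, new question."""
    context = "\n\n".join(context_chunks)
    system = {"role": "system", "content": f"{STYLE_PROMPT}\n\nContext:\n{context}"}
    # Each turn is a user/assistant pair; keep only the last `max_turns` pairs.
    # (The guard matters because history[-0:] would return the whole list.)
    recent = history[-2 * max_turns:] if max_turns > 0 else []
    return [system, *recent, {"role": "user", "content": question}]


def record_turn(history: List[Message], question: str, answer: str) -> List[Message]:
    """Return a new history list with this question/answer pair appended."""
    return history + [
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]


if __name__ == "__main__":
    history: List[Message] = []
    q1 = "What do you work on?"
    messages = build_messages(q1, ["(retrieved passage 1)", "(retrieved passage 2)"], history)
    # In the real app, `messages` would be sent to the LLM; here the reply is a placeholder.
    history = record_turn(history, q1, "(model reply)")
    messages = build_messages("Which tools do you use?", ["(retrieved passage 3)"], history)
    print([m["role"] for m in messages])  # ['system', 'user', 'assistant', 'user']
```

Capping the history with `max_turns` is an assumption made here to keep the prompt within the model's context window; the real app may handle long conversations differently.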

I used the LangChain framework to vectorize the dataset and to query the LLM. Currently, I am using OpenAI's gpt-4o, a generative AI model that also powers ChatGPT. While ChatGPT answers general questions, this chatbot is not retrained on my data; instead, it grounds each answer in the retrieved passages about me, so it is focused on answering questions related to me.
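To show what vectorizing and querying the dataset means, here is a toy Python sketch of the idea behind the LangChain + FAISS step. It is a stand-in, not the real pipeline: a bag-of-words count vector replaces a learned embedding model, and a linear scan with cosine similarity replaces FAISS's index search. `chunk_text`, `embed`, `cosine`, and `ToyVectorStore` are hypothetical names, and the sample sentences are made up.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk_text(text: str, size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Stores (chunk, vector) pairs and returns the chunks most similar to a query."""

    def __init__(self, chunks: List[str]):
        self.entries = [(chunk, embed(chunk)) for chunk in chunks]

    def search(self, query: str, k: int = 2) -> List[Tuple[str, float]]:
        q = embed(query)
        scored = [(chunk, cosine(q, vec)) for chunk, vec in self.entries]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


if __name__ == "__main__":
    # Hypothetical sample text, not real career data.
    docs = [
        "I led the migration of our data pipeline to Azure.",
        "My hobbies include hiking and photography.",
    ]
    chunks = [c for doc in docs for c in chunk_text(doc)]
    store = ToyVectorStore(chunks)
    for chunk, score in store.search("Which cloud did you migrate to? Azure", k=1):
        print(f"{score:.2f}  {chunk}")
```

A real setup would embed the chunks with an embedding model and let FAISS handle the similarity search, which offers optimized exact and approximate indexes instead of this plain linear scan.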

Tools used to build this application:

Front-end:
- JavaScript
- React

Back-end:
- Python
- Flask
- LangChain
- OpenAI
- FAISS

Hosting and deployment:
- Netlify for the React app
- Azure for the Flask app
- GitHub for continuous deployment